Feature Story

CLIENT: HIGHWAI

Sept. 10, 2018: Embedded Computing Design

About six years ago, there was a major shock in a somewhat obscure corner of the computing world when a team from the University of Toronto won the ImageNet Challenge using a convolutional neural network that was trained, rather than designed, to recognize images. That team, and others, went on not only to beat the very best detection algorithms but also to outperform humans in many image classification tasks. Now, only a few years later, it seems that deep neural networks are inescapable.

Even in 2012, machine learning was not new; in fact, pretty much all classification software up to that point used some training. But it all depended to some degree on human-designed feature extraction algorithms. What made this new solution (later dubbed AlexNet after the lead researcher) different was that it had no such human-designed algorithms and achieved its results purely through supervised learning.

The impact of this revelation on the entire field of computing has already been huge, even in areas very far removed from image classification. The changes it has brought are predicted to be even more profound in the future, as researchers learn how to apply deep learning techniques to more and more problems in an ever-growing number of fields. Enthusiasm for deep learning has even led some commentators to predict the end of classical software authoring that depends on designed algorithms, replaced by networks trained on vast quantities of data.

This vision of software solutions evolving from exposure to data has some compelling aspects: training by example offers the possibility of a true mass manufacturing technique for software. Currently, software manufacturing is in a pre-industrial phase where every application is custom designed, rather like coach-built automobiles. With a standard algorithmic platform (the network) and automated training environments, deep learning could do for software what Henry Ford did for automobile manufacturing.

Whether or not you agree with this vision, the key feature of deep learning is that it depends on the availability of data and, therefore, domain-specific expertise becomes less important than ownership of the relevant data. As expressed by deep learning pioneer Andrew Ng: “It’s not the person with the best algorithm that wins, it’s the person with the most data.” This is the central problem faced by companies wanting to transition to the new paradigm: where do they get the data?

For companies that depend on online behavioral data, the answer is obvious, and the recording, tracking and re-selling of all our browsing habits is now so ubiquitous that the overhead of it all dominates our online experiences. For companies that deal more closely with the real world, the solutions are less convenient. Waymo, the best-known name in autonomous vehicles, has addressed this problem by deploying fleets of instrumented cars to map out localities and to record real-world camera, radar and other data that it then feeds into its perception software. Other players in that space have followed suit in a smaller way, but even Waymo, with millions of miles driven and vast amounts of data available to it, finds that data inadequate for the task.

To begin with, not all data is equal: to be useful it must be accurately and thoroughly annotated, and that remains an expensive, error-prone business even today. Despite several years of effort to automate the process, Amazon’s Mechanical Turk is still the go-to method of annotating data. As well as being annotated, data must be relevant to be useful, and that is a major problem when relevance is determined by how uncommon, dangerous or outright illegal any given occurrence is. Reliable, relevant ground-truth data is so hard to come by that Waymo has taken to building its own mock cities out in the desert where it can simulate the behaviors it needs under controlled conditions.

But in a world where Hollywood can produce CGI scenes that are utterly convincing, it must be possible to use that sort of capability to create training data for real-world scenarios and, of course, it is. The industry has been moving in this direction for a few years, with one team of researchers developing a method for annotating sequences from Grand Theft Auto. Udacity also has an open-source project for a self-driving car simulator as part of its self-driving car nanodegree.

Like the Udacity example, most of the available simulators are aimed at implementing a verification loop for testing trained perception stacks rather than producing data primarily intended for the training itself. Those data simulators that do exist are closely held by the auto companies and their startup competitors, demonstrating the fundamental value of the data they produce.

So, can synthetic data be used successfully to train neural networks and, if so, how much and what sort of data are needed to do the job?

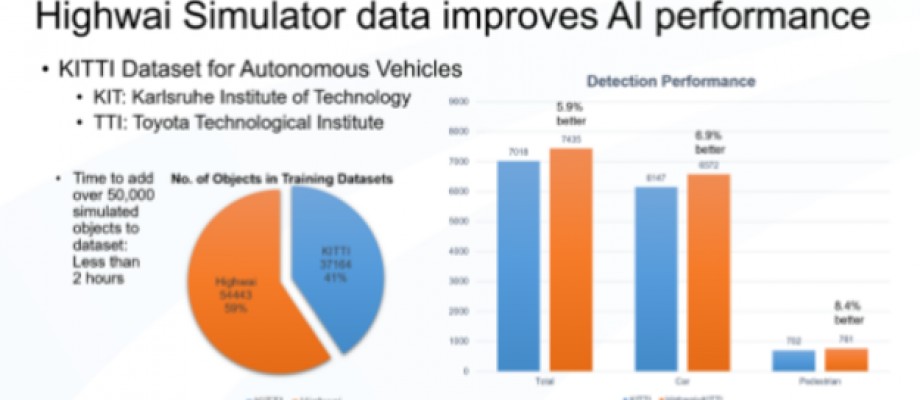

Palo Alto-based Highwai has published the results of a pilot study that uses the KITTI data set as a jumping-off point to examine the gains that are possible when a completely synthetic data set is used to augment the annotated images available from KITTI.

The training images were produced using Highwai’s Real World Simulator and include a number of sequences taken from downtown urban and residential suburban scenes populated with a variety of vehicles, pedestrians and bicycles. The purpose was object detection and classification rather than tracking, so the capture frame rate was set low to enable the capture of a wide variety of images while keeping the data set size moderate. Images were captured over a range of conditions, including camera height and field of view, lighting and shadowing variations due to time of day, and atmospheric effects such as fog and haze. Although Highwai’s tools support LIDAR, only visible-light camera data was captured in this instance. Annotations included categories such as “pedestrian,” “car” and “bicycle,” and a screen-space 2D bounding box was the only type of annotation used.
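To make the annotation format concrete, here is a minimal Python sketch of what a category label plus a screen-space 2D bounding box might look like as a record. The `BoxAnnotation` class, its field names and the pixel-coordinate convention are assumptions made for illustration and are not Highwai's actual output format. The intersection-over-union (IoU) helper is included only because it is a common way of comparing a predicted box with an annotated one; the study summary does not say which matching criterion was used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """Hypothetical record: a category plus a screen-space 2D box in pixels."""
    category: str  # e.g. "pedestrian", "car" or "bicycle"
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        """Box area in square pixels (zero for a degenerate box)."""
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection-over-union of two boxes, in the range 0.0 to 1.0."""
    overlap_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    overlap_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    intersection = overlap_w * overlap_h
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


# Two partly overlapping "car" boxes, made up for the example.
annotated = BoxAnnotation("car", 0, 0, 10, 10)
predicted = BoxAnnotation("car", 5, 0, 15, 10)
overlap = iou(annotated, predicted)
```

A detector's output is often counted as a correct detection when its IoU with an annotated box of the same category exceeds some threshold; the threshold itself is a choice made by the benchmark in use.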

The data was prepared for training using Highwai’s Data Augmentation Toolkit, which was used to add camera sensor noise and image compression noise, to add ‘distractor’ objects to the images and to desensitize the training to color. At the end of this process, the synthetic data set totaled 54,443 objects in 5,000 images, compared with 37,164 objects in 7,000 images in the original KITTI dataset. The total time taken to produce the data, augment it and add it to the training data set was under two hours.
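Two of these augmentation steps are simple enough to sketch in a few lines of Python. The code below is not Highwai's Data Augmentation Toolkit: the function names are hypothetical, Gaussian noise is an assumed stand-in for camera sensor noise, and blending each pixel toward its gray level is only one plausible way of reducing a network's reliance on color. Compression noise and distractor objects are left out. A toy image held as nested lists keeps the sketch dependency-free; a real pipeline would more likely use an array library.

```python
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def clamp(value: float) -> int:
    """Round to the nearest integer and keep it inside the 8-bit range."""
    return max(0, min(255, int(round(value))))


def add_sensor_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add independent zero-mean Gaussian noise to every channel of every pixel."""
    return [
        [tuple(clamp(c + rng.gauss(0.0, sigma)) for c in px) for px in row]
        for row in img
    ]


def desaturate(img: Image, amount: float) -> Image:
    """Blend each pixel toward its gray level; amount=1.0 gives full grayscale."""
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            # Standard luma weights for converting RGB to a gray level.
            gray = 0.299 * r + 0.587 * g + 0.114 * b
            new_row.append(tuple(clamp(c + amount * (gray - c)) for c in (r, g, b)))
        out.append(new_row)
    return out


# A 2x2 toy image, made up for the example.
toy = [[(200, 50, 50), (10, 120, 240)], [(0, 0, 0), (255, 255, 255)]]
rng = random.Random(0)  # fixed seed so the augmentation is repeatable
augmented = add_sensor_noise(desaturate(toy, 0.5), sigma=4.0, rng=rng)
```

Varying `amount` and `sigma` at random from image to image would expose the network to a wider spread of color and noise conditions than the renderer alone produces.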

The base network was a Faster R-CNN Inception ResNet pretrained as an object detector on the Common Objects in Context (COCO) dataset. Supplementary retraining was done twice: first using only the KITTI dataset to produce a baseline, and then with the KITTI and Highwai synthetic data sets combined. Testing was done on the KITTI reference test dataset, which contains only real-world images, and showed significant gains in performance between KITTI-only training and KITTI-plus-synthetic training. The addition of the synthetic data increased recognitions by 5.9 percent overall, with detection of cars and pedestrians improving significantly more, a result that is unsurprising since the Highwai synthetic dataset concentrated on those object types.
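To put the two training sets in proportion, the image and object counts quoted for each can be combined with a little arithmetic. The Python sketch below assumes, purely for illustration, that all of the quoted KITTI and synthetic images went into the combined training run; the study summary does not say how the data was split.

```python
# Counts as quoted in the article for the two data sets.
kitti_images, kitti_objects = 7_000, 37_164
synthetic_images, synthetic_objects = 5_000, 54_443

# Assumption: the combined run used every image from both sets.
combined_images = kitti_images + synthetic_images
combined_objects = kitti_objects + synthetic_objects

# Share of the combined set that is synthetic, by image and by object.
synthetic_image_share = synthetic_images / combined_images
synthetic_object_share = synthetic_objects / combined_objects

# Average number of annotated objects per image in each set.
kitti_density = kitti_objects / kitti_images
synthetic_density = synthetic_objects / synthetic_images

print(f"combined: {combined_images} images, {combined_objects} objects")
print(f"synthetic share: {synthetic_image_share:.1%} of images, "
      f"{synthetic_object_share:.1%} of objects")
print(f"objects per image: KITTI {kitti_density:.1f}, "
      f"synthetic {synthetic_density:.1f}")
```

On these figures, the synthetic images are roughly twice as densely populated with annotated objects as the KITTI images, so they contribute more than half of the objects despite being the smaller set by image count.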

The question of how much training data is needed has no good answer, but Highwai points to highly targeted data curation as essential to keeping the amount within reasonable bounds. A good example is a dataset the company created for an undisclosed object-detection project, where the total amount of image and annotation data actually used for training came to about 15 GB. A total of approximately 12,000 images containing around 120,000 annotated objects was auto-curated down from an original set of 30,000 images and 500,000 annotated objects.
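The effect of that curation is easier to see as ratios. The Python sketch below simply works through the approximate figures quoted in the example; the variable names are illustrative, and the results are only as precise as the rounded counts they come from.

```python
# Approximate counts quoted for the curated and original sets.
original_images, original_objects = 30_000, 500_000
curated_images, curated_objects = 12_000, 120_000

# Fraction of the original material that survived curation.
images_kept = curated_images / original_images
objects_kept = curated_objects / original_objects

# Average number of annotated objects per image before and after.
density_before = original_objects / original_images
density_after = curated_objects / curated_images

print(f"images kept: {images_kept:.0%}, objects kept: {objects_kept:.0%}")
print(f"objects per image: {density_before:.1f} before, "
      f"{density_after:.1f} after")
```

Curation on these numbers keeps about 40 percent of the images but only about 24 percent of the annotated objects, which suggests that the process discards individual annotations or favors less crowded images, not just whole frames at random.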

Results like these are important to independent software makers as well as to system integrators and OEMs. Sure, they can use Amazon’s services to help train networks but if the value is in the data, then commercial viability demands that they are able to create IP in that area – they must be able to create their own training data using their own domain expertise to specify, refine and curate the data sets. This means that the emergence of a tools industry aimed at the production of such IP is a significant step and one that will be welcomed. We can expect to see a rapid development of expertise in the use of synthetic training data and equally rapid development in the tools to produce it.

Peter McGuinness is CEO and co-founder of Palo Alto, CA-based Highwai, a privately held company that develops and markets simulators to train neural networks for very high accuracy object recognition. Contact Mr. McGuinness at peter@highwai.com.
