Feature Story

CLIENT: SUVOLA

September 2013: Pipeline

Advancements in chip technology have brought about the dawn of the age of the microserver. This next generation of server technology is poised to more effectively tackle the issues of cost performance, reliability, space, and power consumption.

Current information-technology systems based on Hadoop, MongoDB, Cassandra, and other products designed to manage Big Data require significant parallel processing power to get through the extremely large volumes of data needed to gain tactical and strategic business insight. These systems require dozens of commodity servers, if not many more, to do the job. Primarily based on x86 CPU technology such as Intel Xeon or AMD Opteron microprocessors, these solutions can be extraordinarily large, power hungry, and challenging to manage because they’re made up of thousands of discrete components, interconnects, cables, and individual chassis. As a result, reliability and continuous availability can be difficult to maintain.

In contrast, microservers made possible by hyperscale integration and system-on-a-chip (SoC) designs provide enterprise-class servers on a single substrate. These next-generation servers come with high-performance, ARM-based CPU cores, a disk controller, and memory management, making them ideal for massively parallel applications in Big Data. Deployed in high-density chassis, SoC designs require far fewer components than current IT systems, provide integrated, high-speed fabric networks, and greatly reduce space and power requirements. By using advanced SoC technology, Big Data systems can be deployed on microserver clusters with significantly higher cost performance.

Over the past few years several companies have entered the SoC market. The first to provide an SoC design for a generally available microserver platform was Calxeda: announced in November 2011, its EnergyCore ECX-1000 SoC is based on four ARM Cortex-A9 cores running at speeds of up to 1.4 GHz.

The design includes an 80 Gbps crossbar fabric switch capable of connecting up to 4,096 nodes, and five separate 10 Gbps external channels. It also provides support for up to five direct-attached SATA devices, four Gen2 PCI Express controllers and a wide collection of I2C, SPI, GPIO, and JTAG ports for system integration and management. These SoCs can operate on as little as 1.5 watts of power; combined with memory and solid-state storage, an entire server drawing less than 7 watts of power is possible.

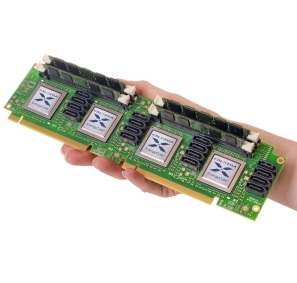

Another new player is AppliedMicro. In October 2011 the company announced its intention to be the first chipmaker to enter the market with a 64-bit ARMv8 core, branded X-Gene. Expected to run at 3 GHz, the X-Gene SoC is more power hungry than Calxeda’s ECX-1000 but still draws considerably less power than comparable x86 technology chips. AppliedMicro demonstrated a server with six X-Gene chips on a single motherboard at ARM TechCon 2012 last fall and expects to have samples in the hands of systems manufacturers later this year, with likely product availability in 2014. Calxeda is also working on ARM 64-bit architectures based on ARMv8 technology, and estimates that its products will be commercially available around the same time as AppliedMicro’s.

Other players in the SoC market include Marvell, which offers its own ARMv6/v7-based chip, dubbed the ARMADA XP Series SoC. The ARMADA provides four cores and clock speeds of up to 1.6 GHz, but it supplies only four limited 1 Gbps network ports and two SATA-drive connections. Although this SoC is sufficient for network-attached storage and lightweight media servers, it’s unlikely to find a home in servers that manage Big Data applications.

AMD also recently announced a partnership with ARM to build 64-bit SoCs, putting AMD in the unique position of being able to offer x86, ARM and GPU technology in its chipsets. Combined with its 2012 acquisition of SeaMicro, a top company in the microserver-systems market, AMD is positioning itself as a potential future leader of both the SoC and microserver markets.

Intel is also attempting to position its Atom processor for the microserver market, but its chip appears to be more evolution than revolution. In fact, it’s not a true SoC, but a 2 GHz lower-power version of existing x86 architecture; the Atom does not feature integrated SATA or network capabilities or other features of true SoCs. That being said, Hewlett-Packard’s Moonshot platform was launched in April as a “microserver” based on Intel Atom chips rather than the Calxeda EnergyCore ECX-1000 chips demonstrated by HP in November 2011.

Nathan Brookwood, a research fellow at semiconductor consulting firm Insight 64, notes that while “some assume that a shift to ARM-based designs is needed to achieve the high processor density and performance-per-watt characteristics associated with the microserver category, Intel argues that its forthcoming 22-nanometer Avoton design can match or beat the metrics claimed by ARM-based competitors. This means the competitive situation in the emerging microserver market will heat up as 2014 progresses, with new 64-bit ARM fabric-based designs from suppliers like Calxeda and AMD, and new 64-bit x86 fabric-based designs from Intel. It is shaping up to be an interesting year.”

Although a variety of microserver chassis based on SoCs from multiple vendors are now available, few are suited to Big Data applications. The two notable exceptions are based on the Calxeda EnergyCore ECX-1000 and the Intel Atom (low-power microprocessor).

Microserver chassis designed for the Calxeda EnergyCore SoCs have been available since late last year from Boston Limited (Viridis) and Penguin Computing (UDX1). Available in 2U and 4U configurations, these systems can support up to 48 SoCs and 72 terabytes (TB) of storage, or, in a single chassis, a reduced SoC count but close to 240 TB of storage. With up to four external 10 Gbps Ethernet ports to expose the native Calxeda network fabric, the Viridis and UDX1 systems can be networked together to build Big Data machines that scale to petabytes using a fraction of the power and space of traditional technologies.
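The figures quoted above can be combined into a rough back-of-the-envelope estimate. The sketch below is illustrative only: it multiplies the article's "less than 7 watts" per server by the 48-SoC maximum and divides the 72 TB of storage across those SoCs. It assumes every node sits at the 7-watt ceiling, and it ignores drives beyond the solid-state storage in that figure, along with fans and power-supply losses, so it is not a measured chassis rating. The function and constant names are hypothetical.

```python
# Figures quoted in the article; the way they are combined is an assumption.
SOCS_PER_CHASSIS = 48       # maximum SoC count quoted for the Viridis and UDX1
STORAGE_TB = 72             # storage quoted alongside the 48-SoC configuration
WATTS_PER_SERVER_MAX = 7    # "less than 7 watts" for SoC + memory + solid-state storage


def node_power_ceiling_watts(socs: int = SOCS_PER_CHASSIS,
                             watts_per_server: float = WATTS_PER_SERVER_MAX) -> float:
    """Upper bound on power drawn by the server nodes alone (no fans, PSU losses)."""
    return socs * watts_per_server


def storage_per_soc_tb(storage_tb: float = STORAGE_TB,
                       socs: int = SOCS_PER_CHASSIS) -> float:
    """Average storage available to each SoC in a fully populated chassis."""
    return storage_tb / socs


if __name__ == "__main__":
    print(f"Node power ceiling: {node_power_ceiling_watts()} W")
    print(f"Storage per SoC:    {storage_per_soc_tb()} TB")
```

Under these assumptions, the server nodes in a full chassis would draw under 336 watts in total, with an average of 1.5 TB of storage behind each SoC.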

HP now plans to release, later this year, a true microserver system based on next-generation Calxeda chips as part of its Moonshot series; the system will support up to 45 Calxeda quad-server cards. However, design specs indicate that only 1 Gbps Ethernet is available to each server card, which will severely limit the communications possible between SoCs designed by other manufacturers. For Big Data applications, the Viridis and UDX1 systems are far more suitable, as they take advantage of the native Calxeda network-fabric interconnect.
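To give a sense of scale for the difference between a 1 Gbps link and a 10 Gbps link, the sketch below computes ideal transfer times at line rate. It is a simplification: the 1 TB payload is an arbitrary illustrative value, and protocol overhead, contention, and fabric topology are all ignored. The function name is hypothetical.

```python
def transfer_seconds(payload_bytes: int, link_gbps: float) -> float:
    """Ideal time to move payload_bytes over a link running at link_gbps (10^9 bits/s)."""
    payload_bits = payload_bytes * 8
    return payload_bits / (link_gbps * 1e9)


if __name__ == "__main__":
    one_terabyte = 10**12  # illustrative payload, not a figure from the article
    for gbps in (1, 10):
        seconds = transfer_seconds(one_terabyte, gbps)
        print(f"{gbps:>2} Gbps: {seconds:.0f} s ({seconds / 60:.1f} min)")
```

At line rate, moving 1 TB takes 8,000 seconds (more than two hours) over 1 Gbps and 800 seconds over 10 Gbps, which is why link speed matters for data-shuffling workloads such as those common in Big Data.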

AppliedMicro, Marvell, AMD, and others don’t have available microservers based on their SoCs at this time. AppliedMicro has demonstrated servers in partnership with Dell, but according to Dell spokeswoman Erin Zehr at TechCon 2012, “We don’t have any plans to make generally available an ARM-based server right now—that includes the AppliedMicro-based prototype.” Expect to see early systems using AppliedMicro’s X-Gene chip as well as Marvell and AMD’s SoC chips sometime next year.

Software support is an absolute necessity for any server technology. Without the appropriate operating systems, resource management, security, and enterprise applications, next-generation microservers are nothing more than doorstops. Servers based on Intel’s Atom chips can run Linux, Windows and a variety of other operating systems and have no issues providing the necessary operating systems and software infrastructure for Big Data.

To date, only versions of Linux are available for the Calxeda ECX-1000 and other ARM-based microserver systems, notably Ubuntu, from Canonical, and Fedora, from Red Hat. Fortunately for Big Data, that’s not a problem: the vast majority of Big Data solutions were designed for Linux, largely due to its origins in the open-source community rather than the R&D groups of IBM, Microsoft or Oracle. With reasonable Java support from Oracle and the open-source community, the deployment of Hadoop, MongoDB, Cassandra, and other Big Data application stacks is possible.

“Microservers show great promise for leading cost performance on Big Data platforms, where the CPU is balanced with local commodity storage and network infrastructure commonly used in Big Data,” said Karl Freund, Calxeda’s VP of marketing. However, getting a build of a Big Data stack optimized for an ARM-based microserver is another story: many of the open-source projects that created the Big Data application stacks in use today were originally developed on large collections of commodity x86-based servers over the last decade or so, resulting in software architectures designed around the characteristics of those servers.

Microservers deployed with ARM-based SoCs have very different architectures and characteristics, ones that weren’t expected or anticipated by the designers of the Big Data applications that are popular now. In many cases these applications need to be modified to play to the strengths of each microserver architecture while minimizing the impact of the architecture’s weaknesses compared to traditional x86 technologies.

The major advantages of microservers include:

The major disadvantages include:

The disadvantages are, in general, short-term issues that are currently being remedied by the industry. Companies such as Suvola and Inktank are addressing the need for enterprise software. Clock rates will likely double in the next year or two. And CPUs capable of 64-bit addressing and larger memory will be available by 2014. As successful deployments of microservers occur over the next several years, a large, vibrant ecosystem of vendors and experts in the field will emerge.

Enterprises that intend to adopt microserver technology in the near future will likely find that the lack of ported and tuned enterprise software is the most significant barrier to rapid adoption. Of course, those that develop a significant portion of their own software should be able to port their code to ARM-based microservers.

The key question then becomes expense versus benefits. In some cases porting challenges will favor enterprises that wait until 2014, when 64-bit ARM-based microservers will be more readily available. As previously mentioned, another challenge is the limited vendor support and overall limited amount of available expertise in the emerging microserver technologies.

Although current limitations on clock rates and 32-bit architecture will limit the application of ARM-based microserver technology in the near future, the availability of 64-bit SoCs in 2014 will usher in the dawn of the age of Big Data systems based on microserver technology. With partnerships already announced between major Big Data software vendors and the suppliers of microserver SoC technology, significant deployments of Big Data on ARM-based microservers should be in production by the end of next year. If industry analysts’ predictions hold true, as much as 20 percent of the enterprise information-technology infrastructure could be running on microserver technology as early as 2016.

However, Jim McGregor, principal analyst at market-research firm TIRIAS Research, points out that the collection, management, analysis, and delivery of insights from Big Data go beyond the server platform. “Microservers are part of the solution, but not the entire solution,” he said. “Going forward, we will have to look at everything from consumer devices to the cloud as a distributed computing platform that must be designed as a complete solution.”

Regardless of the final form Big Data solutions take and where microserver technology is applied, expect Big Data to be a big part of the rapidly emerging microserver-technology ecosystem.
